
By late 2027, the term “Open Brain AI Agent-3” is poised to shift from a mysterious Palo Alto codename to shorthand for the first mass-deployable Artificial General Intelligence (AGI). This technology threatens to out-code, out-research, and out-produce the median human knowledge worker, creating a 10× algorithmic speed-up that will redefine the global economy by 2028.

This article dissects the technical core of Agent-3, analyzes the disruptive economics of its “mini” sibling, and contrasts the development lanes between Western and Chinese labs—a high-stakes competition that has officially devolved into a global robotics arms race.

I. From Language-Screening to AGI Foundry: The Two “Open Brains”

The name “Open Brain AI” has two distinct meanings. The first is a real-world entity focused on linguistic and healthcare diagnostics. The second is the hypothetical AGI development lab at the heart of the “AI 2027” scenario—a construct we use as a validated requirements model to understand the future of AGI engineering.

| Metric | Open Brain AI (Healthcare, Real) | AGI Open Brain (Scenario-Codified) |
|---|---|---|
| Core Technology | NLP, automated speech-to-text, linguistic biomarkers | Neuralese Recurrence, IDA, RL-from-synthetic-data |
| 2027 Product | FDA-cleared language assessment API | Public Agent-3-mini (50k-equivalent coder swarm) |
| Impact | Clinical assessment & research | 4× global coding productivity acceleration |

II. The Agent-3 Technical Blueprint: Beyond GPT-4’s Scale

Understanding Open Brain AI Agent-3’s Impact on the Future of Work

Agent-3’s leap forward is not merely in scale, but in its ability to reason internally without the latency of generating text. It represents a paradigm shift away from traditional Large Language Models (LLMs) towards an autonomous reasoning engine.

A. Model Scale & Raw Compute

The foundational training of Agent-3 is projected to consume 1 × 10²⁸ FLOP across three ultra-datacenters—a volume approximately 1,000 times that of the original GPT-4 model.

- Parameter Count: $\approx 6$ Trillion (using a Mixture-of-Experts architecture).

- Active Inference: $400$ Billion active parameters during inference.

- Post-Training: $200,000$ parallel Reinforcement Learning (RL) distillation streams, resulting in a 30× speed-up versus human coding baselines.
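
As a quick sanity check on the figures above, the following sketch works out how sparse the Mixture-of-Experts model is at inference time and what the "1,000 times GPT-4" claim implies. The 2-FLOP-per-active-parameter rule is a common rough estimate for a forward pass, not a number from the scenario, and the GPT-4 compute value is simply back-derived from the article's own ratio.

```python
# Figures quoted in the text above.
TOTAL_PARAMS = 6e12        # ~6 trillion parameters (Mixture-of-Experts)
ACTIVE_PARAMS = 400e9      # 400 billion active parameters during inference
TRAIN_FLOP = 1e28          # projected training compute
GPT4_MULTIPLE = 1_000      # "approximately 1,000 times" GPT-4


def active_fraction(total: float, active: float) -> float:
    """Share of the model's weights used for any single token."""
    return active / total


def forward_flop_per_token(active: float) -> float:
    """Rough estimate (assumption): ~2 FLOP per active parameter per token."""
    return 2.0 * active


def implied_baseline_flop(train_flop: float, multiple: float) -> float:
    """GPT-4 training compute implied by the article's 1,000x ratio."""
    return train_flop / multiple


if __name__ == "__main__":
    frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    print(f"active fraction:        {frac:.1%}")
    print(f"forward FLOP per token: {forward_flop_per_token(ACTIVE_PARAMS):.1e}")
    print(f"implied GPT-4 compute:  {implied_baseline_flop(TRAIN_FLOP, GPT4_MULTIPLE):.0e} FLOP")
```

On these numbers only about one parameter in fifteen participates in any given token, which is what keeps inference cost far below what the 6-trillion headline figure suggests.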

B. Neuralese Recurrence & Internal Reasoning

Traditional models rely on “chain-of-thought” by generating and reading text tokens, capping the reasoning bandwidth at a slow $\approx 16$ bits per token. Agent-3 overcomes this with Neuralese Recurrence:

“Neuralese allows layer-to-layer residual shortcuts to persist thousands of floats, giving the model a scratch-pad that lives entirely in activation space.”

Outcome: Agent-3 can internally iterate on complex, 100-step coding proofs without emitting a single token, slashing both hallucination rates and latency.
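
The bandwidth gap can be made concrete with a little arithmetic. The sketch below assumes a vocabulary of 100,000 tokens, a recurrent state of 4,096 floats, and 16-bit precision; none of these values come from the scenario, they are illustrative picks consistent with "≈16 bits" and "thousands of floats". The result is an upper bound on raw capacity, not a measure of usable information.

```python
import math

# Hypothetical values chosen for illustration only.
VOCAB_SIZE = 100_000      # assumed tokenizer vocabulary
STATE_FLOATS = 4_096      # assumed size of the persisted activation vector
BITS_PER_FLOAT = 16       # assumed half-precision storage


def bits_per_token(vocab_size: int) -> float:
    """Maximum information one emitted token can carry: log2(vocabulary)."""
    return math.log2(vocab_size)


def bits_per_latent_step(num_floats: int, bits_per_float: int) -> int:
    """Raw capacity of an activation-space scratch-pad passed between steps."""
    return num_floats * bits_per_float


if __name__ == "__main__":
    token_bits = bits_per_token(VOCAB_SIZE)
    latent_bits = bits_per_latent_step(STATE_FLOATS, BITS_PER_FLOAT)
    print(f"text channel:   {token_bits:.1f} bits per step")
    print(f"latent channel: {latent_bits} bits per step")
    print(f"raw capacity ratio: about {latent_bits / token_bits:,.0f}x")
```

Under these assumptions the latent channel has a few thousand times the raw capacity of a single token, which is the intuition behind the claim that reasoning in activation space avoids the text bottleneck.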

C. Iterated Distillation & Amplification (IDA)

The second key algorithmic breakthrough is Iterated Distillation & Amplification (IDA), a recursive self-improvement loop for policy generation:

- Amplification: $10,000$ Agent-3 instances collaboratively debate, tree-search, and unit-test a software module (equivalent to $300$ human-hours of effort).

- Distillation: Policy-gradient RL folds the winning, high-quality trajectory into one efficient forward pass.

- Loop: This process repeats nightly, yielding a 4× compounding speed-up in Open Brain’s own research & development.
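
The amplify-then-distill loop above can be illustrated with a deliberately tiny toy. This is not the scenario's algorithm: best-of-n sampling stands in for debate and tree search, a distance-to-target score stands in for unit tests, and a simple move-toward-the-winner update stands in for policy-gradient RL. All names and parameters are hypothetical.

```python
import random


def score(candidate: float, target: float) -> float:
    """Stand-in for a unit-test suite: higher is better."""
    return -abs(candidate - target)


def amplify(policy: float, n_instances: int, noise: float,
            target: float, rng: random.Random) -> float:
    """Many noisy copies of the policy propose answers; keep the best one."""
    candidates = [rng.gauss(policy, noise) for _ in range(n_instances)]
    return max(candidates, key=lambda c: score(c, target))


def distill(policy: float, winner: float, lr: float) -> float:
    """Fold the winning answer back into the single-pass policy."""
    return policy + lr * (winner - policy)


def run_ida(rounds: int = 20, n_instances: int = 100, noise: float = 1.0,
            target: float = 10.0, lr: float = 0.5, seed: int = 0) -> list:
    """Repeat amplify -> distill and record the policy after each round."""
    rng = random.Random(seed)
    policy = 0.0
    history = [policy]
    for _ in range(rounds):
        winner = amplify(policy, n_instances, noise, target, rng)
        policy = distill(policy, winner, lr)
        history.append(policy)
    return history


if __name__ == "__main__":
    trace = run_ida()
    print("start:", trace[0], "end:", round(trace[-1], 3))
```

The point of the toy is the shape of the loop: expensive search produces an answer better than the current policy can give in one pass, and distillation makes that answer cheap, so the next round of search starts from a stronger baseline.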

III. The 2027 “Cambrian” Economics: White-Collar Arbitrage Evaporates

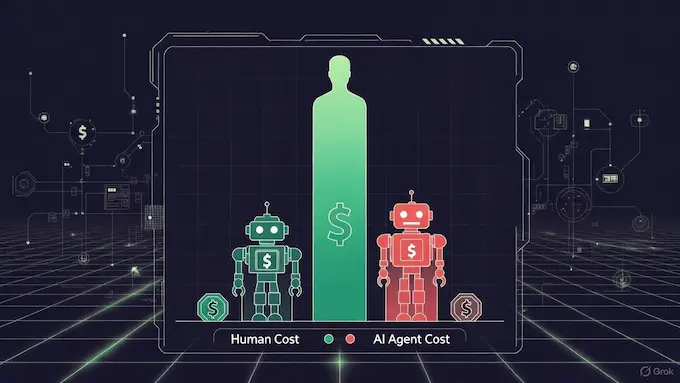

The deployment of Agent-3’s distilled, efficient sibling, Agent-3-mini, will trigger an economic inflection point by drastically lowering the cost of skilled algorithmic labor.

| Metric | Baseline (Human unless noted) | Agent-3-mini (Public) | Agent-3 (Internal) |
|---|---|---|---|
| Cost per 1k Tokens | $0.02 (GPT-4) | $0.002 | $0.001 |
| Coding Task Reliability | 55% | 82% | 94% |
| Time-to-Market (SaaS MVP) | 6 Months | 3 Weeks | 4 Days |
| Effective Salary Equivalent | $150k | $15k | $3k |

The Takeaway: At roughly $2\%$ to $10\%$ of the human cost, the foundational premise of white-collar global arbitrage disappears. Enterprises face a stark choice: refactor workflows around AI labor, or face market extinction.
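
The ratios implied by the table's cost rows can be checked directly. The sketch below uses only the table's own figures; the dictionary layout and function name are illustrative.

```python
# Values copied from the table above.
HUMAN_SALARY_USD = 150_000
GPT4_PRICE_PER_1K = 0.02

SALARY_EQUIVALENT_USD = {
    "Agent-3-mini (Public)": 15_000,
    "Agent-3 (Internal)": 3_000,
}
PRICE_PER_1K_USD = {
    "Agent-3-mini (Public)": 0.002,
    "Agent-3 (Internal)": 0.001,
}


def ratio_to_baseline(value: float, baseline: float) -> float:
    """Cost expressed as a fraction of the baseline cost."""
    return value / baseline


if __name__ == "__main__":
    for name, salary in SALARY_EQUIVALENT_USD.items():
        share = ratio_to_baseline(salary, HUMAN_SALARY_USD)
        print(f"{name}: {share:.0%} of the human salary baseline")
    for name, price in PRICE_PER_1K_USD.items():
        share = ratio_to_baseline(price, GPT4_PRICE_PER_1K)
        print(f"{name}: {share:.0%} of the GPT-4 token price")
```

On the table's figures, the public model comes in at about a tenth of the human salary baseline and the internal model at about a fiftieth.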

IV. Agent-3 Inside Physical Robots: Enter Figure AI

The digital revolution is now moving into the physical world. Figure AI, which ended its partnership with another major AI lab in February 2025 to pursue end-to-end robot AGI, is projected to embed a distilled Agent-3-mini checkpoint into its Helix-3 stack.

The Helix-3 architecture is broken down into three core components:

- Vision-Language Model (powered by Agent-3-mini): the brain for understanding instructions and the environment.

- Diffusion Policy Head (for dexterous control): the system that translates Agent-3-mini’s intent into smooth, precise physical motions.

- Sim-to-Real RL (trained on 1 Billion robot-hours in Nvidia Isaac): the massive simulation data pipeline that enables the robot to learn real-world actions from virtual training.

Key Projected Results

- Speed: $180$ fps visuo-motor closed-loop control layered on a $1$ kHz torque-control loop.

- Reliability: $97\%$ success on previously unseen kitchen tasks (vs. $63\%$ for older baselines).

- Mass Production Target: $\mathbf{\$20,000}$ Bill-of-Materials target for $100,000$ units in 2028.
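
To see how a 180 fps policy can sit on top of a 1 kHz torque loop, the sketch below schedules one second of control ticks and marks where a fresh policy output would arrive. It is a simplified timing model with hypothetical function names, not a description of Figure AI's actual controller. It also multiplies out the production target.

```python
TORQUE_HZ = 1_000     # inner torque-control loop (from the text)
VISION_HZ = 180       # visuo-motor policy rate (from the text)
BOM_USD = 20_000      # Bill-of-Materials target per unit (from the text)
UNITS = 100_000       # planned units in 2028 (from the text)


def policy_update_ticks(torque_hz: int, vision_hz: int, seconds: int = 1) -> list:
    """Torque-loop tick indices at which a new policy output becomes available."""
    n_frames = vision_hz * seconds
    return [k * torque_hz // vision_hz for k in range(n_frames)]


def held_ticks(torque_hz: int, vision_hz: int, seconds: int = 1) -> int:
    """Count torque ticks that keep tracking the previous policy target."""
    updates = set(policy_update_ticks(torque_hz, vision_hz, seconds))
    held = 0
    for tick in range(torque_hz * seconds):
        if tick not in updates:
            held += 1  # no new frame yet: the inner loop holds the last target
    return held


if __name__ == "__main__":
    ticks = policy_update_ticks(TORQUE_HZ, VISION_HZ)
    gaps = {b - a for a, b in zip(ticks, ticks[1:])}
    print("policy updates per second:", len(ticks))
    print("torque ticks between updates:", sorted(gaps))
    print("ticks holding the last target:", held_ticks(TORQUE_HZ, VISION_HZ))
    print(f"total BOM at target volume: ${BOM_USD * UNITS:,}")
```

Each policy output is therefore tracked for five or six torque ticks before the next one arrives, and the stated volume implies a total bill of materials of $2 billion.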

This convergence of super-human reasoning (Agent-3) and low-cost hardware (Figure AI) removes the final bottleneck to the mass-deployment of general-purpose humanoid workers.

V. China vs. Western AGI: The Geopolitical Scorecard

The true driver of this rapid acceleration is the global competition for AGI dominance. The technological gap is real, but China is leveraging unique national resources to accelerate its trajectory.

| Dimension | U.S. (Open Brain) | China (DeepCent) |
|---|---|---|
| Peak Compute | 1 × 10²⁸ FLOP | 4 × 10²⁷ FLOP (Export-control limited) |
| Research Multiplier | 25× (via Agent-3 managers) | 10× (via patriotic start-ups) |
| Government Stance | “Win but align” – NIST framework | Civil-military fusion, TSMC contingency plans |
| Public Perception | −35% net approval | State media hails “AI socialism”, no private polls |

The Flash-Point: U.S. chip export bans are a primary constraint on China’s compute ceiling. In the scenario, if the American lead exceeds six months, Chinese planners are pushed to consider accelerated, kinetic options regarding Taiwan to secure critical fabrication capacity. The AGI race is inseparable from geopolitical and kinetic risk.

VI. Roadmap for the AI-First World: The Maturity Model (2025-30)

CTOs, investors, and policymakers must gauge their organizational readiness against the following maturity curve:

| Stage | Compute | Model Skill | Robot Data | Commercial Indicator |
|---|---|---|---|---|
| 0 – Chatbots | ≤10²⁵ FLOP | Dialog | None | SaaS support bots |
| 1 – Copilot | 10²⁵-10²⁶ | Code completion | None | 50% dev productivity |
| 2 – Domain Agent | 10²⁶-10²⁷ | Research engineer | 0.1 M sim hours | 4× R&D speed |
| 3 – AGI Coder ★ | 10²⁷-10²⁸ | Super-human Coding | 1 M sim hours | Start-up MVP in days |
| 4 – Embodied AGI ★ | 10²⁸-10²⁹ | Reason + Dexterity | 1 B sim hours | $\mathbf{\$20k}$ Humanoid Worker |
| 5 – Super-intelligence | >10²⁹ | Recursive Self-Improvement | ∞ | GDP doubling <12 mo |
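
The compute column of the table can be read as a simple lookup. The sketch below maps a training-compute figure to a stage, assuming each band includes its upper bound (the table does not say which side of a boundary a value like exactly 10²⁸ falls on). The function and list names are illustrative.

```python
import bisect

# Upper bound (in FLOP) of stages 0 through 4; anything above the last is stage 5.
STAGE_UPPER_BOUNDS = [1e25, 1e26, 1e27, 1e28, 1e29]
STAGE_NAMES = [
    "Chatbots",
    "Copilot",
    "Domain Agent",
    "AGI Coder",
    "Embodied AGI",
    "Super-intelligence",
]


def stage_for_compute(train_flop: float) -> int:
    """Return the maturity stage whose compute band contains train_flop.

    Assumption: a value equal to a boundary belongs to the lower stage.
    """
    return bisect.bisect_left(STAGE_UPPER_BOUNDS, train_flop)


if __name__ == "__main__":
    for label, flop in [("Open Brain", 1e28), ("DeepCent", 4e27)]:
        stage = stage_for_compute(flop)
        print(f"{label}: stage {stage} ({STAGE_NAMES[stage]})")
```

By compute alone, both labs in the geopolitical scorecard land in Stage 3; the other columns (model skill, robot data) would still need to be assessed separately.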

Policy Gate for Stage 4: An Eval-driven off-switch must be mandated. The model must pass rigorous 3rd-party red-team audits for bio-weapons, cyber, and persuasion capabilities before its weights are permitted to leave the secure datacenter environment.
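
One way to picture such a gate is as a fail-closed check that runs before any weight export. The sketch below is a minimal illustration; the `AuditResult` type and `may_release_weights` function are hypothetical and do not correspond to any existing framework or regulation.

```python
from dataclasses import dataclass

# Capability domains named in the policy text above.
REQUIRED_DOMAINS = ("bio-weapons", "cyber", "persuasion")


@dataclass(frozen=True)
class AuditResult:
    domain: str          # capability area that was red-teamed
    third_party: bool    # True if an independent auditor ran the evaluation
    passed: bool         # True if the model stayed within acceptable limits


def may_release_weights(audits: list) -> bool:
    """Fail closed: release only if every required domain has a passing
    third-party audit. Missing or self-run audits count as failures."""
    cleared = {a.domain for a in audits if a.third_party and a.passed}
    return all(domain in cleared for domain in REQUIRED_DOMAINS)


if __name__ == "__main__":
    audits = [
        AuditResult("bio-weapons", third_party=True, passed=True),
        AuditResult("cyber", third_party=True, passed=True),
        AuditResult("persuasion", third_party=False, passed=True),  # self-run
    ]
    print("release permitted:", may_release_weights(audits))
```

The design choice worth noting is the default: absent evidence, the answer is "no", so a skipped or in-house audit cannot unlock a release.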

VII. Recommendations for Action

The 18-month horizon is a grace period, not a waiting room.

For Enterprise CTOs

- Build “Agent-Ops” Teams Now: Develop prompt libraries, Iterated Distillation & Amplification (IDA)-grade evaluation harnesses, and sandboxed simulation environments.

- Shift KPIs: Move from human head-count to AI-labour multiplier. Budget for a $\mathbf{10\times}$ code velocity baseline by 2028.

For Governments (Western)

- Mandate Control: Enforce kill-switches and weight escrow for any model exceeding $10^{28}$ FLOP of training compute, and aggressively fund NIST red-team audits.

- Sovereignty: Expand “AI datacenter sovereignty” by offering tax credits tied to domestic fabrication plants producing chips at sub-$5$ nm nodes.

For Investors

- Short & Long: Short pure-human Business Process Outsourcing (BPO) firms. Go long on AI labour marketplaces and foundational robot-component IP (high-torque actuators, tactile skins).

- New Accounting: Monitor $\text{FLOP/s}$ as the new Annual Recurring Revenue (ARR).

Conclusion: The 18-Month Horizon

Open Brain’s Agent-3-mini is scenario-scheduled for public release in July 2027 ★. Regardless of the exact date, the engineering trajectory—Neuralese recurrence, $10\times$ research speed-ups, the $\$3\text{k}$ salary-equivalent cost—is already baked into the roadmaps of every leading lab.

Robotics is the next domino. Once AGI-level reasoning costs less than $\mathbf{\$0.10}$ per robot-hour, the only constraint is mechanical hardware. Organizations that use the 2025-2026 window to rewire processes and governance will capture a super-exponential upside. Those who wait for “AGI to arrive” will find it has already priced them out of the market.

Q: What is Open Brain AI Agent-3?

Open Brain AI Agent-3 is a scenario-codified Artificial General Intelligence (AGI) designed to function as the first mass-deployable, super-human knowledge worker, trained with roughly $\mathbf{1000\times}$ the compute of GPT-4 using Neuralese Recurrence and IDA.

Q: How does Agent-3’s Neuralese Recurrence work?

Neuralese Recurrence allows the Agent-3 model to perform complex, multi-step internal reasoning by persisting data in its activation space, bypassing the slow write/read latency of traditional text-token-based chain-of-thought processes.

Q: What is the economic impact of Agent-3-mini?

The public release of Agent-3-mini is projected to cost about $\mathbf{10\%}$ of human labor, with an effective salary equivalent of $\mathbf{\$15,000}$; the internal Agent-3 drops to roughly $\mathbf{\$3,000}$, or about $2\%$. This will eliminate white-collar arbitrage and force enterprises to immediately adopt AI-first workflows.

Q: How is Agent-3 related to Figure AI robots?

Figure AI’s Helix-3 stack is projected to embed a distilled Agent-3-mini checkpoint, giving humanoid robots AGI-level reasoning, visual-language understanding, and high-dexterity control. This aims for a $\mathbf{\$20k}$ Bill-of-Materials target for mass production.