
1. Introduction: The Robot That Sees More Than Shelves

If you have walked into a supermarket lately, you might have noticed a tall, camera-laden robot cruising the aisles. It glides past cereal, swivels toward condiments, and pauses at empty facings. It looks focused on products. It is also a sensor platform in a dense human environment, and the people in that environment expect privacy. That detail matters.

Retail inventory robots deliver measurable wins. They cut out-of-stock rates. They catch price label mismatches. They hand store teams a living map of what is on the shelf right now, not last Tuesday. The catch is simple. To see the shelf, the robot must see the world around the shelf. That means people. Faces. Gait. Clothing with logos. Hands holding a phone. Bags with addresses. An employee badge. All of this can land in a raw frame or a cached video. That is where privacy begins.

This piece explains how inventory robots capture data, what gets stored, who gets access, and how to build systems that are both effective and respectful. Shoppers and staff expect privacy from retail inventory robots, and we will meet that expectation with grounded practice, not vague platitudes.

2. How Inventory Robots Actually Work

2.1 Hardware, Optics, And Field Of View

Most retail inventory robots use a mast or panoramic camera array roughly at eye level. Wide lenses capture entire bays with enough resolution to read barcodes, shelf labels, and planogram markers. Many units add depth sensors for distance and LiDAR for navigation. Some deploy RFID wands in high shrink categories. Microphones are rare for inventory use, but some platforms keep an ambient audio channel for collision or safety cues. The robot’s field of view always overlaps with people. That is unavoidable in active stores.

2.2 On-Board Perception And The Shelf Brain

Raw frames feed a compact model zoo. You will typically find text detection, barcode detection, price tag recognition, product recognition by packaging features, and planogram alignment. Depth and pose estimation help the robot stay centered and maintain legal clearances. Preprocessing steps crop, deskew, and sharpen regions that look like labels. Most vendors now run a two-stage approach. A small model filters for candidate regions to keep bandwidth low. A larger model, local or remote, resolves hard cases like near-duplicate product variants.
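The two-stage approach is essentially a confidence cascade. Here is a minimal sketch, assuming the small model returns one score per candidate region and that both models can be wrapped as plain callables. The names `Region`, `Resolution`, `cascade`, and both thresholds are hypothetical, not any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Region:
    """A candidate label region cropped from a frame (hypothetical structure)."""
    region_id: str
    small_score: float  # confidence from the cheap first-stage filter


@dataclass
class Resolution:
    region_id: str
    label: str
    stage: str  # which model produced the answer: "small" or "large"


def cascade(
    regions: List[Region],
    small_label: Callable[[Region], str],
    large_label: Callable[[Region], str],
    keep_threshold: float = 0.2,
    confident_threshold: float = 0.9,
) -> List[Resolution]:
    """Drop weak candidates, answer easy ones with the small model,
    and escalate only the ambiguous middle band to the larger model."""
    results = []
    for r in regions:
        if r.small_score < keep_threshold:
            continue  # not a label: never forwarded anywhere
        if r.small_score >= confident_threshold:
            results.append(Resolution(r.region_id, small_label(r), "small"))
        else:
            results.append(Resolution(r.region_id, large_label(r), "large"))
    return results
```

The privacy payoff sits in the first `continue`: regions the small model rejects are never forwarded, so fewer pixels leave the robot.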

2.3 Data Flows, From Capture To Action

The pipeline looks like this.

- Capture. The robot records frames and metadata: time, location, aisle, and bay.

- Preprocess. The system crops regions of interest and discards the rest if a retention policy allows it.

- Infer. Models output shelf gaps, mispriced tags, and misplaced items.

- Sync. Results, and sometimes raw crops, sync to a store server or a cloud endpoint.

- Act. Store associates receive tasks on handhelds. Refill. Correct a tag. Move a product.

- Learn. The system uses human confirmations to retrain product recognizers and tune thresholds.

Privacy lives inside steps one through four. That is where image retention and access policies either keep people safe or leave gaps large enough to drive a shopping cart through.

3. What Gets Collected, Retained, And Accessed

3.1 Primary Inventory Data

This is the data you intend to capture, such as label images, product facings, counts, shelf coordinates, and confidence scores. It is sensitive only if linked to specific employees by name or tied to customer identifiers. Most of it can be kept in aggregated form with low risk.

3.2 Incidental Human Data

This is the risky part. Shoppers and staff wander into frame. A child peers around the robot. A cashier walks by with a name badge. A customer opens a wallet near a shelf camera. Incidental human data includes faces, bodies, clothing, tattoos, disability aids, and personal items. It can be biometric or quasi-biometric, and it can reveal protected characteristics. Treat it as personal data by default.

3.3 Derived Behavioral Signals

Engineers love signals. Models can infer dwell time near a product, traffic flow through an aisle, or reach patterns toward high shelves. With enough resolution and time alignment, you can connect behaviors to a person across frames. That turns an innocuous shelf camera into an analytics apparatus. If you choose to compute behavioral signals, you own the privacy risk. Design for minimalism.

3.4 Typical Retention Patterns

Left unchecked, raw video sticks around for weeks because storage is cheap and engineers like to “keep it for debugging.” That habit creates unnecessary exposure. Mature deployments set three staggered windows.

- Ephemeral Buffer, minutes to hours. For collision or incident review only. Auto purge.

- Working Set, hours to days. Crops and thumbnails tied to open tasks for human verification.

- Anonymized Corpus, days to months. Redacted images and synthetic renderings used to improve models.
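One way to make the three windows enforceable is to encode them as data rather than tribal knowledge. This sketch assumes each stored item carries a tier and a creation timestamp. The window values mirror the policy blueprint in section 6 and are examples only; `Tier`, `RETENTION`, and `purge` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class Tier(Enum):
    EPHEMERAL = "ephemeral_buffer"
    WORKING = "working_set"
    CORPUS = "anonymized_corpus"


# Example windows (assumed): 2 hours, 48 hours, 60 days.
RETENTION = {
    Tier.EPHEMERAL: timedelta(hours=2),
    Tier.WORKING: timedelta(hours=48),
    Tier.CORPUS: timedelta(days=60),
}


def is_expired(tier: Tier, created_at: datetime, now: datetime) -> bool:
    return now - created_at >= RETENTION[tier]


def purge(items, now: datetime):
    """Split items into (keep, drop) id lists.
    items: iterable of (item_id, tier, created_at) with timezone-aware datetimes."""
    keep, drop = [], []
    for item_id, tier, created_at in items:
        (drop if is_expired(tier, created_at, now) else keep).append(item_id)
    return keep, drop
```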

3.5 Who Gets Access

Access usually spans four groups.

- Store Operations. Associates and managers view tasks, not raw feeds.

- IT And Security. A small admin group can access logs for incidents.

- Robotics Vendor. Support engineers get scoped access to anonymized crops. Raw frames require a formal incident ticket.

- Analytics Partners. Rare and tightly controlled. They receive aggregated, de-identified data with contractual limits.

If you cannot list your access groups on one page, your program is too loose.
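A one-page access list can also be a one-screen lookup table. The sketch below assumes a deny-by-default check; the group names, the `Resource` categories, and `can_access` are illustrative labels for the four groups above, not a real product's roles.

```python
from enum import Enum, auto
from typing import Optional


class Resource(Enum):
    TASKS = auto()
    REDACTED_CROPS = auto()
    AUDIT_LOGS = auto()
    AGGREGATES = auto()
    RAW_FRAMES = auto()


# The whole access map fits on one screen (hypothetical group names).
ACCESS = {
    "store_ops": {Resource.TASKS},
    "it_security": {Resource.AUDIT_LOGS},
    "vendor_support": {Resource.REDACTED_CROPS},
    "analytics_partner": {Resource.AGGREGATES},
}


def can_access(group: str, resource: Resource, incident_ticket: Optional[str] = None) -> bool:
    """Deny by default: unknown groups and unlisted resources get nothing."""
    if resource is Resource.RAW_FRAMES:
        # Raw frames: vendor support only, and only with a formal incident ticket.
        return group == "vendor_support" and bool(incident_ticket)
    return resource in ACCESS.get(group, set())
```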

4. Privacy Risks That Matter In The Real World

4.1 Re-Identification Via Background Cues

Even if you blur faces, a combination of clothing, time, and purchase pattern can re-identify someone, especially in small communities. Background text, QR codes on parcels, or a loyalty card flash can also undo redaction.

4.2 Model Drift And Bias

Product recognizers trained on clean lab data fail under new lighting, promotional shippers, or seasonal packaging. Engineers then pull more store footage to fix accuracy. Retention creeps. If you do not audit that loop, you end up warehousing human images you never meant to save. Bias enters when staff oversight concentrates in specific neighborhoods or times of day, which can skew attention and enforcement.

4.3 Data Leaks And Misuse

The most common leak is the simplest. A developer exports a debug folder to a personal device. The second most common is a poorly configured cloud bucket that hosts raw crops. Rare but serious incidents include third parties using inventory cameras to build shopper recognition. Write policies that assume ordinary mistakes, not movie-plot attackers.

4.4 Chilling Effects For Shoppers And Staff

Constant sensing changes behavior. Shoppers avoid aisles that feel observed. Employees feel graded on their every move. You will feel that pain in retention and sales long before you read it in a legal brief.

5. Design Principles For Privacy In Retail Inventory Robots

5.1 Minimize By Design

Collect only what you need to keep shelves accurate. You do not need high frame rate video to detect a missing box. Capture stills at controlled intervals. Prefer tight crops over full frames. Disable audio unless a documented safety case requires it.

5.2 Compute At The Edge

Run detection on the robot. Emit structured findings: product ID, bay, and confidence. Retain raw pixels only when a label is unreadable and a human must check it. Edge processing reduces bandwidth, shrinks the attack surface, and keeps sensitive pixels out of your cloud.
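A minimal sketch of that edge output, assuming the on-device models produce `(product_id, bay, confidence, crop)` tuples and that a `product_id` of `None` means the label was unreadable. Treating reads below 0.6 confidence as unreadable is an assumption, and `Finding`, `Upload`, and `build_uploads` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Finding:
    product_id: Optional[str]  # None when the label could not be read
    bay: str
    confidence: float


@dataclass
class Upload:
    finding: Finding
    crop: Optional[bytes]  # pixels attached only when a human must check


def build_uploads(detections, min_confidence: float = 0.6) -> List[Upload]:
    """detections: iterable of (product_id, bay, confidence, crop_bytes).
    Structured findings always go up; pixels go up only for unreadable labels."""
    uploads = []
    for product_id, bay, confidence, crop in detections:
        finding = Finding(product_id, bay, confidence)
        needs_human = product_id is None or confidence < min_confidence
        uploads.append(Upload(finding, crop if needs_human else None))
    return uploads
```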

5.3 Redact Before Storage

Apply face blurring and badge redaction on the device. Do not ship identifiable frames to central storage. For belt and suspenders, run a server-side redaction pass as well. Use unit tests that fail builds if the redactor misses benchmark cases such as children at cart height, reflective surfaces, posters with faces, and mirrored coolers.
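A redaction test can start by measuring how many known-sensitive regions the redactor actually covered. This sketch assumes axis-aligned `(x0, y0, x1, y1)` boxes and counts a face or badge as redacted when a single blur box covers at least 90% of it; the box format, the threshold, and the function names are assumptions, and a production suite would also score precision.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def area(b: Box) -> int:
    return max(0, b[2] - b[0]) * max(0, b[3] - b[1])


def coverage(target: Box, blur: Box) -> float:
    """Fraction of the target box covered by one blur box."""
    inter = (max(target[0], blur[0]), max(target[1], blur[1]),
             min(target[2], blur[2]), min(target[3], blur[3]))
    return area(inter) / area(target) if area(target) else 1.0


def redaction_recall(cases: Dict[str, Tuple[List[Box], List[Box]]], min_cover: float = 0.9) -> float:
    """cases maps a benchmark name to (ground-truth sensitive boxes, boxes the redactor blurred)."""
    hit = total = 0
    for truth, blurred in cases.values():
        for t in truth:
            total += 1
            if any(coverage(t, b) >= min_cover for b in blurred):
                hit += 1
    return hit / total if total else 1.0


def check_redactor(cases, required_recall: float = 1.0) -> None:
    """Raise AssertionError (failing the build) when recall regresses."""
    recall = redaction_recall(cases)
    assert recall >= required_recall, f"redaction recall {recall:.3f} below {required_recall}"
```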

5.4 Separate Keys From Content

Encrypt data in transit and at rest. Hold keys in a separate service. Rotate keys on a predictable schedule. Make key scope granular enough that a single leak does not open the whole lake.
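One common way to make key scope granular is to derive a separate data key per store and per day from a root key that stays inside the key service. This sketch uses HKDF (RFC 5869) with SHA-256, built on Python's standard `hmac` module for a single 32-byte output block. The `KeyService` class and the scope string format are hypothetical.

```python
import hashlib
import hmac
from datetime import date


class KeyService:
    """Stand-in for a separate key service. In production the root key
    would live in an HSM or managed KMS, not in application memory."""

    def __init__(self, root_key: bytes, salt: bytes = b""):
        # HKDF-Extract: PRK = HMAC(salt, IKM); an empty salt becomes 32 zero bytes.
        self._prk = hmac.new(salt or b"\x00" * 32, root_key, hashlib.sha256).digest()

    def data_key(self, store_id: str, day: date, version: int = 1) -> bytes:
        """HKDF-Expand for one 32-byte block, scoped to one store, one day, one key version."""
        info = f"inventory-robot|v{version}|{store_id}|{day.isoformat()}".encode()
        return hmac.new(self._prk, info + b"\x01", hashlib.sha256).digest()
```

Rotation then means bumping `version` on a schedule, and a leaked data key exposes one store for one day rather than the whole lake.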

5.5 Short Retention, Long Audit

Keep pixels briefly. Keep logs longer. Engineers often flip that logic. Do not. Pixels create personal risk. Logs create accountability. Store cryptographic hashes of files and redaction manifests for audit without keeping the original images.
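A minimal sketch of such an audit entry, assuming redactions are recorded as boxes with a kind label. The field names and `audit_record` are hypothetical. The entry holds a SHA-256 digest rather than the image, so it can later confirm whether a file presented for review matches what was stored.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(image_bytes: bytes, redactions: list, redactor_version: str) -> dict:
    """Build an audit entry that proves what was stored and how it was redacted,
    without retaining any pixels."""
    return {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "redactions": redactions,  # e.g. [{"kind": "face", "box": [x0, y0, x1, y1]}]
        "redactor_version": redactor_version,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def serialize(record: dict) -> str:
    """Stable JSON so the same record always serializes to the same bytes."""
    return json.dumps(record, sort_keys=True)
```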

5.6 Human-Centered Transparency

Shoppers deserve to know what the robot does. Use clear signage at entrances and on the robot body. Describe what is captured, why, and for how long. Publish a short URL that resolves to a readable policy. Add a QR code. Provide an email address for data requests. Train associates to answer basic questions without deflection.

6. A Practical Policy Blueprint

Use this as a starting point. Keep it short enough that store managers will actually read it.

Purpose. The robot captures shelf images to detect out-of-stocks, mispriced items, and misplaced products. It does not capture audio. It does not perform facial recognition.

Collection. The robot captures still frames and crops related to shelf labels and products. People may appear incidentally.

Edge Processing. Detection runs on the robot. Faces and badges are blurred on device before upload.

Retention. Ephemeral buffer up to 2 hours, auto purge. Working set up to 48 hours for task verification. Redacted training corpus up to 60 days, reviewed monthly. No indefinite storage of identifiable images.

Access. Store operations see only task summaries and redacted crops. Vendor support can access redacted data for debugging with a time-boxed ticket. Raw unredacted frames require executive approval and a documented incident.

Security. Encryption in transit and at rest. Keys managed separately. Access logged. Quarterly review.

Rights. Shoppers and employees can request access to or deletion of images that include them. Requests acknowledged within a business week and fulfilled inside a defined window.

Governance. A cross-functional privacy board reviews metrics, incidents, and audit logs. Metrics include redaction precision, retention compliance, access exceptions, and response time to data rights requests.

7. Engineering Patterns That Keep You Honest

7.1 Privacy Gates In The Build Pipeline

Bake privacy checks into continuous integration. A pull request that changes image processing must run a redaction test suite. Fail builds that increase retained pixel area, enlarge frame buffers, or add new personally revealing cues. Use a budget model. If a developer wants to keep more pixels for a feature, they must remove pixels elsewhere.
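The budget model can be expressed as a small gate that CI runs against the baseline and proposed pipeline configurations. This sketch assumes each configuration can be summarized as a list of retained crop sizes; the 200,000-pixel cap and the function names are placeholders.

```python
from typing import Iterable, Tuple

# Hypothetical cap on total retained pixel area per capture, summed over kept crops.
PIXEL_BUDGET = 200_000


def retained_area(crops: Iterable[Tuple[int, int]]) -> int:
    """crops: (width, height) of every crop a pipeline configuration keeps."""
    return sum(w * h for w, h in crops)


def budget_gate(baseline_crops, proposed_crops, budget: int = PIXEL_BUDGET):
    """Return (ok, message). Fails if retained area grows or exceeds the budget."""
    before = retained_area(baseline_crops)
    after = retained_area(proposed_crops)
    if after > budget:
        return False, f"retained area {after} exceeds budget {budget}"
    if after > before:
        return False, f"retained area grew from {before} to {after}; remove pixels elsewhere"
    return True, f"retained area {after} (was {before})"
```

A CI step would call `budget_gate` and exit nonzero when `ok` is false, so a feature that needs more pixels has to give some back elsewhere.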

7.2 Immutable Ledger For Access

Every time someone opens a frame, write a signed entry with who, why, and when. Use a tamper-evident log or a managed append-only service. Send a weekly digest to the privacy board. Engineers behave better when they know that a human will read the log.
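A tamper-evident log does not need exotic infrastructure. The sketch below chains each entry to its predecessor's signature and signs entries with HMAC-SHA256, so editing any entry, or removing one from the middle, breaks verification. The `AccessLedger` class is hypothetical, and in this sketch it holds the signing key itself; a production system would keep that key in the separate key service.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Optional


class AccessLedger:
    """Append-only, hash-chained access log with HMAC signatures."""

    def __init__(self, signing_key: bytes):
        self._key = signing_key
        self.entries = []

    def _sign(self, body: dict) -> str:
        payload = json.dumps(body, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def record(self, who: str, why: str, frame_id: str, when: Optional[str] = None) -> dict:
        prev = self.entries[-1]["sig"] if self.entries else "genesis"
        body = {"who": who, "why": why, "frame_id": frame_id,
                "when": when or datetime.now(timezone.utc).isoformat(), "prev": prev}
        entry = dict(body, sig=self._sign(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every signature and check each link in the chain."""
        prev = "genesis"
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "sig"}
            if body["prev"] != prev or not hmac.compare_digest(self._sign(body), e["sig"]):
                return False
            prev = e["sig"]
        return True
```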

7.3 Event-Driven Deletes

Do not bury deletes in cron jobs that people forget to maintain. Tie them to lifecycle events. Task closed. Ticket closed. Retention horizon reached. When the event fires, delete the pixels and write a proof record with file hash, timestamp, and job id.
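A minimal sketch of an event-driven delete, assuming crops are indexed by the task that created them. `PixelStore` is an in-memory stand-in for object storage and `on_task_closed` is a hypothetical handler name; each proof record carries the file hash, timestamp, and job ID described above.

```python
import hashlib
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class ProofRecord:
    file_sha256: str
    deleted_at: str
    job_id: str
    trigger: str


class PixelStore:
    """In-memory stand-in for crop storage (a real system would wrap object storage)."""

    def __init__(self):
        self.blobs: Dict[str, bytes] = {}
        self.by_task: Dict[str, List[str]] = {}
        self.proofs: List[ProofRecord] = []

    def put(self, blob_id: str, task_id: str, data: bytes) -> None:
        self.blobs[blob_id] = data
        self.by_task.setdefault(task_id, []).append(blob_id)

    def on_task_closed(self, task_id: str) -> List[ProofRecord]:
        """Event handler: delete every crop tied to the task and write one proof per file."""
        job_id = str(uuid.uuid4())
        proofs = []
        for blob_id in self.by_task.pop(task_id, []):
            data = self.blobs.pop(blob_id, None)
            if data is None:
                continue  # already gone; nothing to prove
            proofs.append(ProofRecord(
                file_sha256=hashlib.sha256(data).hexdigest(),
                deleted_at=datetime.now(timezone.utc).isoformat(),
                job_id=job_id,
                trigger=f"task_closed:{task_id}",
            ))
        self.proofs.extend(proofs)
        return proofs
```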

7.4 Synthetic Data Over Human Images

For model improvement, prefer synthetic shelf scenes. Use generative tools to produce diverse lighting, occlusion, and packaging variants. Mix with tightly redacted human-free crops. Your model will improve without expanding human exposure.

7.5 Privacy-First Telemetry

You still need observability. Build metrics on counts and distributions, not on images. For example, report the number of unreadable labels by aisle, the false positive rate for out-of-stocks, and the proportion of frames that required human review. Avoid storing samples “for later.” Engineers will look at them “for debugging,” and that is how retention policies erode.
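A minimal sketch of image-free telemetry, assuming frames and out-of-stock outcomes are reported as events. The class and field names are hypothetical. The out-of-stock figure approximates the false positive rate as the share of flags that associates rejected, which is what store data usually supports.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ShelfTelemetry:
    """Counts only: no images, no sample buffers kept "for later"."""
    unreadable_by_aisle: Counter = field(default_factory=Counter)
    frames_total: int = 0
    frames_human_review: int = 0
    oos_flagged: int = 0
    oos_rejected: int = 0

    def record_frame(self, aisle: str, unreadable_labels: int, needed_review: bool) -> None:
        self.frames_total += 1
        self.unreadable_by_aisle[aisle] += unreadable_labels
        self.frames_human_review += int(needed_review)

    def record_oos_outcome(self, confirmed: bool) -> None:
        self.oos_flagged += 1
        self.oos_rejected += int(not confirmed)

    def summary(self) -> dict:
        return {
            "unreadable_by_aisle": dict(self.unreadable_by_aisle),
            "human_review_rate": self.frames_human_review / self.frames_total if self.frames_total else 0.0,
            "oos_flag_rejection_rate": self.oos_rejected / self.oos_flagged if self.oos_flagged else 0.0,
        }
```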

8. Legal And Ethical Guardrails, In Plain Language

8.1 Data Minimization And Purpose Fit

Collect the least data that does the job. Use it only for inventory accuracy and safety. Do not repurpose it for marketing, security profiling, or staff scoring without a separate program, separate consent, and separate controls.

8.2 Consent And Notice In A Store

You cannot pop up a consent dialog for every shopper. You can provide meaningful notice. Signage at entrances. Badging on the robot. A readable policy page. An opt-out channel for images that include a specific person, subject to feasibility and safety.

8.3 Rights To Access And Erasure

Build a workflow to find a person in the data. That means searchable capture windows, store locations, and robot route maps. If you cannot locate a person’s images upon request, you have collected more than you can responsibly manage. Keep processes small and predictable.
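A minimal sketch of the lookup side of an access or erasure request, assuming each stored image is indexed by store, aisle, and capture window. It narrows candidates by time, place, and route rather than by face matching, consistent with the no-facial-recognition position in this piece; a human reviewer then confirms which candidates actually show the requester. `CaptureWindow` and `find_candidates` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class CaptureWindow:
    blob_id: str
    store_id: str
    aisle: str
    start: datetime
    end: datetime


def find_candidates(index: List[CaptureWindow], store_id: str, visit_start: datetime,
                    visit_end: datetime, aisles: Optional[List[str]] = None) -> List[str]:
    """Return blob ids whose capture window overlaps the requester's stated visit."""
    hits = []
    for w in index:
        if w.store_id != store_id:
            continue
        if aisles and w.aisle not in aisles:
            continue
        if w.start < visit_end and visit_start < w.end:  # half-open intervals overlap
            hits.append(w.blob_id)
    return hits
```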

8.4 Vendor Management

Your privacy posture is only as strong as your weakest contractor. Flow down retention, access, and redaction requirements into vendor agreements. Do not allow sub-processors without written approval. Run test requests through your vendor to verify that they can respond.

8.5 Bias And Fairness

Fairness is not only a property of people detection. It shows up in how you deploy robots. If you patrol some neighborhoods more heavily than others, or always scan during certain shifts, you skew attention. Publish a deployment schedule. Balance coverage. Report aggregate accuracy by location to catch patterns.

9. Transparency That Builds Trust

9.1 Signage That Speaks Like A Human

Say this, or something close.

“Inventory robot at work. It takes pictures of shelves to find missing or mispriced items. People may appear in images by accident. Faces and badges are blurred before storage. Images are deleted after short periods. Scan this code for details or email privacy@yourstore.com.”

9.2 The Store Associate Script

Give associates a clear one-liner.

“The robot helps us keep shelves accurate. It blurs faces on device and deletes images quickly. You can read the details here, or I can help you get in touch with our privacy team.”

9.3 Publish Metrics, Not Promises

Once a quarter, publish a simple privacy report. Include average retention times, number of access exceptions, number of deletion requests, and redaction accuracy measured on a held-out test set. Real numbers beat glossy claims.

10. Security Basics That Prevent Real Incidents

10.1 Role-Based Access, Not Shared Accounts

Personal accounts only. No shared logins for “robot support.” Disable accounts when roles change. Rotate credentials on a schedule.

10.2 Segmented Networks

Robots live on a segmented network with strict egress rules. Only necessary endpoints are allowlisted. No direct access to the public internet. No peer-to-peer device traffic without explicit approval.

10.3 Strong Defaults

Disable storage of raw streams by default. Disable debug modes outside test stores. Require a signed waiver to enable extended logging for a short window, with automatic rollback.

10.4 Attack Surface Reduction

Remove services you do not use. Close ports. Avoid remote shells on robots in production. Use signed firmware and secure boot. Keep a golden image and use reproducible builds.

10.5 Incident Response That Works On A Tuesday

Run drills. Simulate a lost laptop with cached crops. Simulate a misconfigured bucket. Simulate a vendor requesting raw frames. Measure time to containment, communication, and deletion.

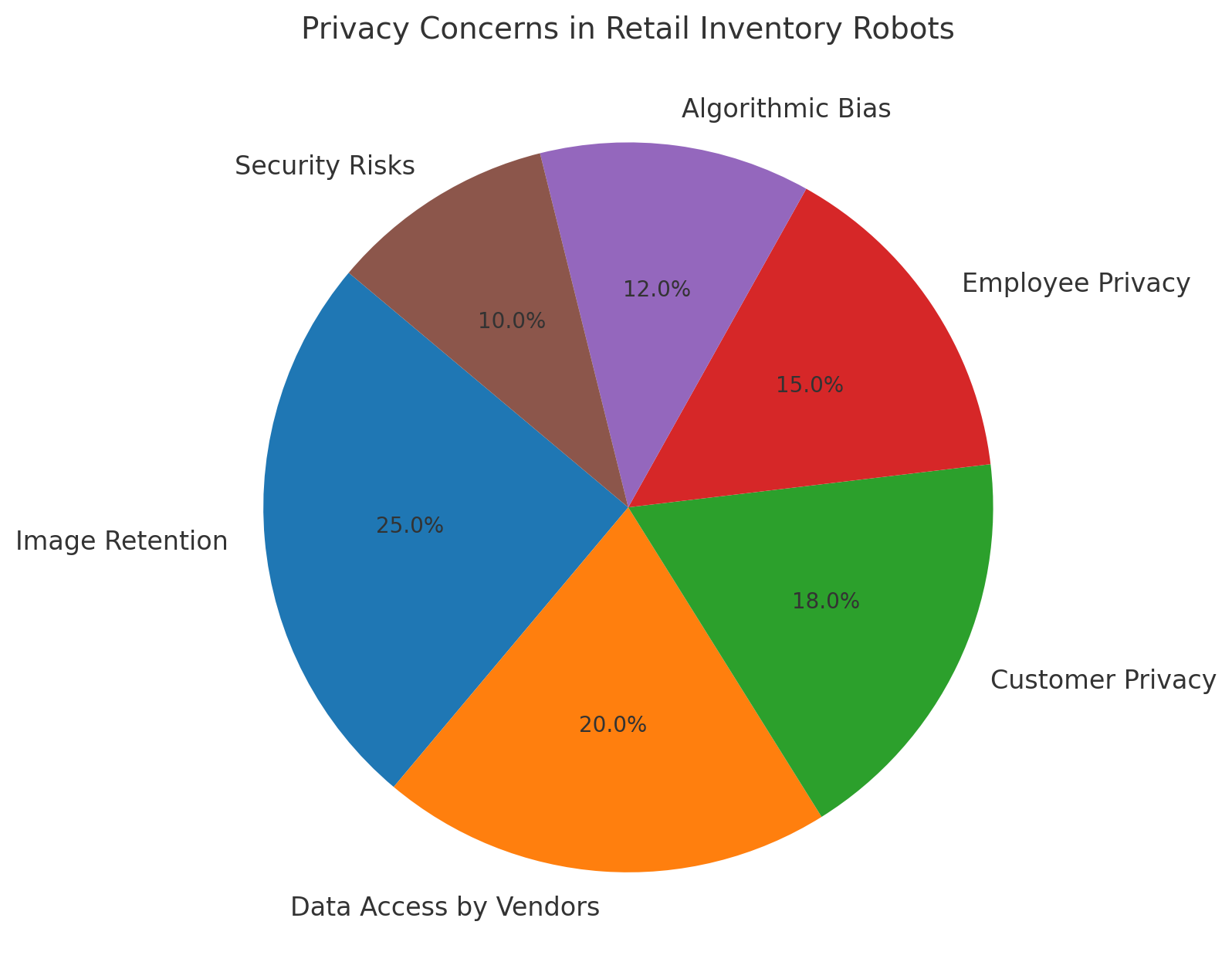

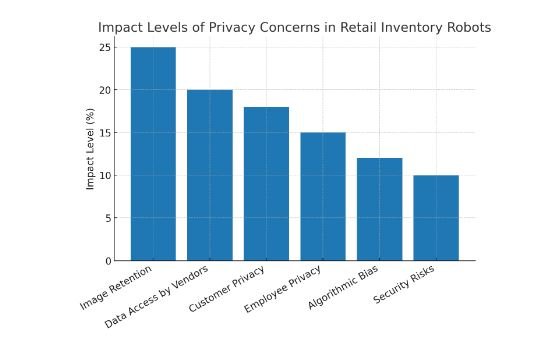

11. Visual Representation With Bar Graph And Pie Chart

Comparison:

| Privacy Concern | Impact Level (%) |
|---|---|
| Image Retention | 25 |
| Data Access by Vendors | 20 |
| Customer Privacy | 18 |
| Employee Privacy | 15 |
| Algorithmic Bias | 12 |
| Security Risks | 10 |

11.2 Visual Representation With Bar Graph

12. A Step-By-Step Rollout Plan For Ethical AI In Retail

12.1 Phase One, Policy Before Pixels

Write and approve your policy blueprint. Pick retention windows. Define access roles. Choose your redaction stack. Configure edge processing. Only then turn on capture.

12.2 Phase Two, Pilot In A Single Store

Run in one store for thirty days. Track accuracy, redaction precision, retention compliance, and access logs. Interview shoppers and staff. Publish the findings internally.

12.3 Phase Three, Harden The Pipeline

Move redaction to the device if it is still server side. Replace any manual export process with ticketed, logged flows. Turn on event-driven deletes. Prove that you can find and purge a person’s image when asked.

12.4 Phase Four, Scale With Guardrails

Roll to more stores with the same settings. Do not relax retention rules to hit schedule. Add automated audits that alert if buffers grow or if any service stores raw frames longer than policy.

12.5 Phase Five, Continuous Improvement

Rotate test sets every quarter. Refresh adversarial cases. Review metrics with the privacy board. Publish a short public update. Keep training your teams.

13. Metrics That Show You Are Doing Privacy Right

- Redaction precision and recall on a labeled test set.

- Median and 95th percentile retention time for identifiable images.

- Number of access exceptions, with a target of zero.

- Age distribution of training images, skewed recent and redacted.

- Percentage of perception done at the edge. Aim high.

- Shopper and employee satisfaction from short surveys, not just a complaint log.

- Time to fulfill a data access or deletion request. Keep it short and predictable.
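The retention metric in the list above is straightforward to compute from deletion records. This sketch assumes you log the hours from capture to deletion for each identifiable image and uses the nearest-rank definition of the 95th percentile; other percentile definitions give slightly different numbers on small samples. The function names are hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def nearest_rank_percentile(values: Sequence[float], pct: int) -> float:
    """Smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def retention_stats(hours_retained: Sequence[float]) -> Tuple[float, float]:
    """Return (median, p95) of capture-to-deletion time in hours."""
    return statistics.median(hours_retained), nearest_rank_percentile(hours_retained, 95)
```

The 95th percentile matters more than the median here: a healthy median can hide a handful of images that lingered far past policy.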

14. Common Anti-Patterns To Avoid

- Keeping raw video “just in case.” That case never arrives. Risk does.

- Expanding access quietly over time. Scope creep is real.

- Debugging in production with ad hoc exports. Build proper tools.

- Handing vendors broad data rights. Write narrow, enforceable terms.

- Using privacy as a marketing slide, not a practiced discipline with metrics.

15. The Business Case For Doing This Well

Privacy is not a tax on innovation. It is a speed boost. When you keep pixels short-lived and tightly redacted, your legal reviews are faster. When you compute at the edge, your cloud bills drop. When shoppers trust your store, your opt-in rates for loyalty programs go up. When employees feel respected, they stay. These are not soft outcomes. They are line items.

16. Conclusion: Build Systems People Would Choose To Live With

Inventory robots can make retail calmer and more reliable. Products are where they should be. Prices match the shelf. Associates spend time serving people, not hunting for mislaid cans. That future is worth building. It demands privacy by design, not privacy by press release.

If you are a retailer, set a higher bar. Capture less. Compute locally. Redact early. Delete quickly. Audit everything. Publish what you measure. Treat privacy in retail inventory robots as a product requirement, not a compliance chore.

Call To Action. If you run retail tech, pick one change from this article and ship it this month. Move redaction to the robot. Cut your working set from seven days to forty-eight hours. Add a public metrics page. Small, concrete changes add up. Your shoppers will feel the difference. Your teams will be proud of the system they built. That is the point.

17. Frequently Asked Questions

- Do Inventory Robots Use Facial Recognition?

No. They do not need it for inventory tasks. If any system proposes it, say no unless you have a lawful, specific, consented reason that passes public scrutiny.

- What Exactly Gets Stored, And For How Long?

Short answer: redacted crops tied to open tasks for under two days, anonymized training data for under two months, and audit logs for longer. Tune the windows to your risk posture. Never keep identifiable images indefinitely.

- Who Can See My Image If I Appear In A Frame?

By policy, only a narrow support group can access unredacted images and only for a documented incident. Associates and managers see task results and redacted thumbnails.

- Can Robots Track Individual Shoppers Over Time?

They could if you design them that way. Do not. Avoid persistent identifiers for people. Use aggregate traffic maps computed on device or on redacted data.
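A minimal sketch of an aggregate traffic map with no persistent identifiers, assuming the device emits one `(aisle, minute_of_day)` pair per person detection. Because nothing links detections across frames, the counts measure presence, not unique visitors. The 15-minute buckets, the rule that suppresses buckets under five detections, and the name `aisle_traffic` are assumptions, not a standard.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def aisle_traffic(detections: Iterable[Tuple[str, int]], bucket_minutes: int = 15,
                  min_count: int = 5) -> Dict[Tuple[str, int], int]:
    """detections: (aisle, minute_of_day) per person detection in a single frame.
    No track IDs or embeddings: each detection is counted, then forgotten.
    Buckets with fewer than min_count detections are suppressed."""
    counts = Counter((aisle, minute // bucket_minutes * bucket_minutes)
                     for aisle, minute in detections)
    return {key: n for key, n in counts.items() if n >= min_count}
```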

- How Do You Make Sure Redaction Actually Works?

Treat it like a core feature. Build a labeled test set with tough cases. Run it in CI. Track precision and recall. Fail builds that regress. Spot check in stores with privacy board members.

- What About Employee Privacy?

Apply the same rules. No covert scoring from robot footage. If you analyze task completion time, use handheld logs, not body imagery. Engage with staff councils when policies change.

- Are There Alternatives To Cameras For Inventory?

Yes. RFID for tagged items. Weight sensors for smart shelves. Computer vision from fixed shelf cameras with tight cropping. Each option brings tradeoffs in cost, accuracy, and privacy. Pick the least intrusive tool that meets the business goal.